EA Forum Lowdown: April 2022

What if the EA Forum digest was a tabloid instead of a weather report?

Imagine a tabloid to the EA Forum digest's weather report. This publication gives an opinionated curation of EA Forum posts published during April 2022, according to my whim. I probably have a bias towards forecasting, longtermism, evaluations, wit, and takedowns.

You can also see this post on the EA Forum here.

Index

How did we get here?

Monthly highlights

Finest newcomers

Keen criticism

Underupvoted underdogs

How did we get here?

Back and forth discussions

On occasion, a few people discuss a topic at some length in the EA Forum, going back and forth and considering different perspectives across a few posts. There is a beauty to it.

On how much of one’s life to give to EA

Altruism as a central purpose (a) struck a chord with me:

After some thought I have decided that the descriptor that best fits the role altruism plays in my life, is that of a central purpose. A purpose can be a philosophy and a way of living. A central purpose transcends a passion; even considering how intense and transformative a passion can be. It carries a far deeper significance in one’s life. When I describe EA as my purpose, it suggests that it is something that my life is built around; a fundamental and constitutive value.

Of course, effective altruism fits into people’s lives in different ways and to different extents. For many EAs, an existing descriptor adequately captures their perspective. But there are many subgroups in EA for whom I think it has been helpful to have a more focused discussion on the role EA plays for them. I would imagine that a space within EA for purpose-driven EAs could be particularly useful for this subset, while of little interest to the broader community.

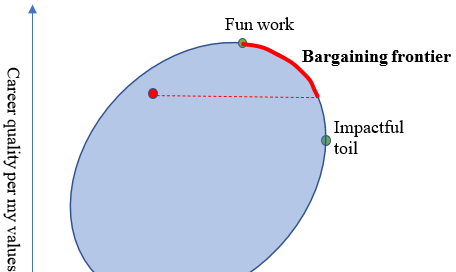

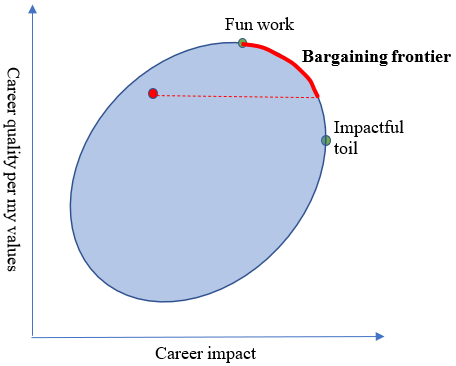

In contrast, Eric Neyman's My bargain with the EA machine (a) explains how someone who isn't quite as fanatically altruistic established his boundaries in his relationship with the EA machinery:

I’m only willing to accept a bargain that would allow me to attain a higher point than what I would attain by default – but besides that, anything is on the table.

...

I really enjoy socializing and working with other EAs, more so than with any other community I’ve found. The career outcomes that are all the way up (and pretty far to the right) are ones where I do cool work at a longtermist office space, hanging out with the awesome people there during lunch and after work.

Note that there is a tension between creating inclusive spaces that would include people like Eric and creating spaces restricted to committed altruists. As a sign of respect, I left a comment on Eric’s post here.

Jeff Kaufman reflects on the increasing demandingness in EA (a). In response, a Giving What We Can (GWWC) pledger explains that he just doesn't care (a) all that much what the EA community thinks about him. He writes (lightly edited for clarity): "my relative status as an EA is just not very important to me... no amount of focus on direct work by people across the world is likely to make me feel inadequate or rethink this... we [GWWC pledgers] are perfectly happy to be by far the most effectively altruistic person we know of within dozens of miles".

On decadence and caution

I found the contrast between EA Houses: Live or Stay with EAs Around The World (a) and posts such as Free-spending EA might be a big problem for optics and epistemics (a) and The Vultures Are Circling (a) striking and amusing. Although somewhat forceful, the posts presenting considerations to the contrary probably didn’t move the spending plans already in motion.

Personal monthly highlights

I appreciated the ruthlessness of Project: bioengineering an all-female breed of chicken to end chick culling (a).

Luke Muehlhauser writes Features that make a report especially helpful to me (a). I thought this was a valuable reference piece that aspiring researchers should read. I also liked Tips for conducting worldview investigations (a), where Luke mentions that blog posts and short reports of less than 100 pages are not that useful to him.

Leo writes an update to the Big List of Cause Candidates (a), which gathers many of the novel cause area ideas people have been suggesting on the EA Forum since early 2021.

The Simon Institute writes an Update on the Simon Institute: Year One (a). Despite their, at times, verbose writing, I found the post to be excellent. Ditto for an update on CSER.

Of the April fools (a) posts, Half Price Impact Certificates (a) tickled my brain. The post offers £20 worth of impact for £10, and notes that "the certificates are a form of stable currency, as they are pegged to the British pound, and so the impact isn't subject to volatility."

Case for emergency response teams (a) suggests creating a standing reserve which could quickly deploy in times of crisis. It is part of a longer series (a).

Nicole Noemi gathers some forecasts about AI risk (a) from Metaculus, DeepMind co-founders, Eliezer Yudkowsky, Paul Christiano, and Ajeya Cotra's report on AI timelines. I found it helpful to see all these in the same place.

Aaron Gertler rates and reviews rationalist fiction (a).

The Forecasting Wiki (a) launches (a).

Finest newcomers

Tristan Cook develops and presents a highly detailed model of the likelihood of us seeing "grabby aliens" (a).

mpt7 writes Market Design Meets Effective Altruism (a) analyzing the charity ecosystem from a market perspective. The standpoint in the post could be a fruitful perspective from which to generate new mechanisms that EA might want to use.

Evan Price writes Organizations prioritising neat signals of EA alignment might systematically miss good candidates (a).

In my career I have worked for 18 years as a structural engineer and project manager in senior roles at small, niche companies. I've been fully remote for several years, have worked with teams on five continents, and have always worked hard to excel as a people manager and to produce rigorous technical work and robust, efficient systems under challenging conditions.

Through EA volunteering work and other recent non-EA work I have been assessed as experienced, engaged, and self-directed in my operations work. I received a single response from the six applications and was removed from consideration there before an interview.

... it does seem like there could be an incongruity here - I do have several years of relevant experience, I am strongly EA aligned, and I didn't receive responses from five applications.

Leftism virtue cafe (a)'s shortform is very much worth reading. I particularly enjoyed:

Riffing on NegativeNuno, totally unknown persons created a Pessimistic Pete (a) Twitter account and a Positive Petra (a) EA Forum alias.

Ryan Kidd asks: How will the world respond to "AI x-risk warning shots" according to reference class forecasting? (a). I thought it was interesting:

If such a warning shot occurs, is it appropriate to infer the responses of governments from their responses to other potential x-risk warning shots, such as COVID-19 for weaponized pandemics and Hiroshima for nuclear winter?

To the extent that x-risks from pandemics has lessened since COVID-19 (if at all), what does this suggest about the risk mitigation funding we expect following AI x-risk warning shots?

Do x-risk warning shots like Hiroshima trigger strategic deterrence programs and empower small actors with disproportionate destructive capabilities by default?

rileyharris (a) posts their thesis about normative uncertainty. I'm on the fanatical utilitarianism side, so I just read the summary in the introduction, but I thought it was worth a shoutout.

Keen criticism

Rohin Shah (a) rants about bad epistemics on the EA Forum:

Reasonably often (maybe once or twice a month?) I see fairly highly upvoted posts that I think are basically wrong in something like "how they are reasoning", which I'll call epistemics. In particular, I think these are cases where it is pretty clear that the argument is wrong, and that this determination can be made using only knowledge that the author probably had (so it is more about reasoning correctly given a base of knowledge)...

niplav argues for rewarding Long Content (a) and criticizes the recent $500k blog prize. Uncharacteristically enough, it received a thoughtful answer (a) from the target of his criticism. niplav then retracted his post.

DID THEY NOT SEE THE IRONY OF PUTTING OUT A PRIZE LOOKING FOR BLOGS FOCUSED ON LONGTERMISM WITH POSTS THAT STAND THE TEST OF TIME, BUT EXCLUDE ANY BLOG OLDER THAN 12 MONTHS? AM I GOING CRAZY?

Forethought Foundation researcher pummels popular (a) Climate Change alarmist.

I appreciated aogara (a)'s criticisms of FTX spending on publicity (a) and the BioAnchors Timelines (a).

billzito (a) talks about whether having "despair days" set aside for criticism would be a good idea and shares some struggles with knowing whether criticisms are welcome.

Underupvoted underdogs

Go Republican, Young EA! (a, 69 upvotes): "Young effective altruists in the United States interested in using partisan politics to make the world better should almost all be Republicans. They should not be Democrats, they should not be Greens, they should be Republicans."

Josh Morrison calls for research (a, 40 upvotes) for an ambitious biosecurity project: getting the US to issue an Advanced Market Commitment for future covid vaccines.

Thus both the campaign’s object-level goal (accelerating coronavirus vaccines) and meta-goal (expanding the long-term use of advanced market commitments) have major EA benefits beyond reducing COVID disease burden.

In Avoiding Moral Fads? (a, 23 upvotes), Davis Kingsley introduces the concept of a moral fad, gives previous examples (recovered memory therapy, eugenics and lobotomy), and asks about what the EA community could do to not fall prey to moral fads.

Using TikTok to indoctrinate the masses to EA (a, -5 upvotes). Although the post's title is terrible, the videos (a) referred to struck me as both witty and edgy. The author also has 14k followers and 1M likes on TikTok, which seems no small feat.

Effective Altruism Isn't on TV. (a, 11 upvotes): "Internet Archive lets you search the TV captions of major news programs back to 2009... Here’s how often effective altruism and charity evaluators were mentioned... RT (Russia Today) provided the most mentions of effective altruism."

Jackson Wagner asks What Twitter fixes should we advocate, now that Elon is on the board? (a). In the comments, Larks suggests "federated censorship" (a). Wagner suggests play-money prediction markets (a), perhaps by acquiring Manifold Markets.

Virtue signalling is sometimes the best or the only metric we have (a, 34 upvotes). I thought this was a good point.

A Preliminary Model of Mission-Correlated Investing (a, 27 upvotes):

According to my preliminary model, the altruistic investing portfolio should ultimately allocate 5–20% on a risk-adjusted basis to mission-correlated investing. But for the current EA portfolio, it's better on the margin to increase its risk-adjusted return than to introduce mission-correlated investments.

I appreciated this summary (a) of The case for strong longtermism.

I also appreciated Replaceability versus 'Contextualized Worthiness' (a). It seems like a valuable concept for those who struggle with the thought of being replaceable. Sadly, the post doesn't have an upvote button.

Adam Shimi opens An Incubator for Conceptual Alignment Research Bets (a, 37 upvotes): "I’m not looking for people to work on my own research ideas, but for new exciting research bets I wouldn’t have thought about."

Note to the future: All links are added automatically to the Internet Archive, using this tool (a). "(a)" for archived links was inspired by Milan Griffes (a), Andrew Zuckerman (a), and Alexey Guzey (a).

Effective Altruism and petty drama [emoji of a llama]

— @Kirsten3531 (a)